| Keyphrase | Searches | March | April | May | June | Aug | Sept | Oct |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| homeschool | 137,953 | +100 | 65th | 65 | 59 | | | |
| home education | 4,530 | | | | | | | |
| homeschool curriculum | 28,814 | 21 | 20 | 14 | 13 | 10 | | |
| homeschool material | 2,278 | 55 | 9 | 8 | 10 | | | |
| homeschool online | 6,441 | 9 | 8 | 4 | 5 | | | |
| homeschool resource | 5,607 | 16 | 22 | | | | | |

Blorum.info: A blog+forum for discussions, often with myself, about how the digital media industry functions. Since you've wandered in, feel free to share some thoughts as comments on the blog. You might find a few insights. Please share a few too.

Sunday, July 30, 2006

Homeschool Keyphrase progress

Friday, July 14, 2006

Trackbacks on Blogger

Trackbacks are a little hard to understand. They are not intuitive. With some help from Adam, I've now figured out what a trackback is and how to use it. Here are the steps:

1. Get set up. Trackbacks are not built in to Blogger. You need a third-party tool. I use Haloscan. Adam figured this all out and set it up for me.

2. Create an article and cite another blog's article. Here, for instance, is a link to a comment on Blogger trackbacks from Haloscan's blog.

3. After publishing your article, log in to Haloscan, go to "manage trackbacks", click "send trackback ping", and provide the URLs of both blog articles (the citing one and the cited one).
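Under the hood, a trackback ping is just a small HTTP POST sent to the cited article's trackback URL, which is presumably what Haloscan does on my behalf in step 3. Here is a minimal Python sketch of that request as I understand the TrackBack specification. The example.com and example.org URLs are made up for illustration, and the code only builds the request without sending anything.

```python
from urllib.parse import urlencode
from urllib.request import Request
import xml.etree.ElementTree as ET


def build_trackback_ping(ping_url, my_post_url, title="", excerpt="", blog_name=""):
    """Build (but do not send) the POST that tells the cited blog about my post."""
    fields = {
        "url": my_post_url,      # the only required field: the citing article
        "title": title,
        "excerpt": excerpt,
        "blog_name": blog_name,
    }
    body = urlencode(fields).encode("utf-8")
    return Request(
        ping_url,
        data=body,
        headers={"Content-Type": "application/x-www-form-urlencoded; charset=utf-8"},
        method="POST",
    )


def ping_succeeded(response_xml):
    """The reply is a tiny XML document; <error>0</error> means success."""
    root = ET.fromstring(response_xml)
    return root.findtext("error", default="1").strip() == "0"


ping = build_trackback_ping(
    "http://example.com/trackback/123",   # hypothetical ping URL of the cited article
    "http://example.org/my-post.html",    # hypothetical URL of my own article
    title="Trackbacks on Blogger",
    blog_name="Blorum.info",
)
print(ping.get_method(), ping.full_url)
print(ping.data.decode("utf-8"))
```

Actually sending it would be a call to `urllib.request.urlopen(ping)`, followed by passing the reply text to `ping_succeeded`.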


Thursday, July 13, 2006

Robots.txt - Another lesson from SES Miami implemented

Vanessa explained to me that a sc****d up robots.txt file was probably not helping me. Then, seeing the truly blank look on my face, she explained to me what a robots.txt file was.... Then, she sighed and looked at her watch.....

In any case, I've been studying hard this week and I think I now understand... In my Google Sitemaps account, the robots.txt analysis for http://www.time4learning.com/robots.txt says:

Last downloaded: July 13, 2006 4:36:05 PM PDT. Status: 200 (Success)

This means that my site returned a "here's the content that you want" message for the request for a robots file. The report continues:

Parsing results for http://www.time4learning.com/robots.txt:

robots.txt file does not appear to be valid

Then in the box, it has the source code for my default "page not found" page. Got it. :> I'm returning the wrong page without an appropriate error code. Since I don't know how to fix that (it requires involvement of my hosting company, which is a non-starter... I know, I should move. I should also exercise more and not stare, but...), I should at least create a proper robots.txt file and place it there. Which I've now done!

The Google tool also has a nifty ability to test a robots.txt file. What a shame I put mine up prior to testing... But since Vanessa actually wrote it for me at the show and I emailed it to myself, it was a reasonably safe bet. Now I've tested it and it seems to be successful.

Check off another base covered....
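For anyone who wants to test a robots.txt file before uploading it (unlike me), Python's standard library ships a parser. This is a minimal sketch only: the rules below are a made-up sample, not the file Vanessa wrote for me, and Python's parser is not guaranteed to agree with Google's in every edge case.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical sample rules: let every crawler in, except for one folder.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def is_allowed(robots_txt, url, user_agent="Googlebot"):
    """Return True if the given robots.txt text lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


for url in (
    "http://www.example.com/index.html",
    "http://www.example.com/private/notes.html",
):
    print(url, "->", "allowed" if is_allowed(ROBOTS_TXT, url) else "blocked")
```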


Result Codes & Redirects

Through the haze of 301s, 304s, 200s, and 404s, I can now discern some meaning.

It turns out there are two sets of issues: results & redirects. And the reason it is easy to confuse them is that they are the same but different. From my point of view, there are three topics which rely on these codes:

- trying to understand the result codes that come back when we request pages

- trying to redirect pages and domains in ways that are useful to users and search engines

- trying to configure the site so that apparently great solutions do not come back and get me in trouble (i.e., right now I handle all "page not found" situations by serving a default page with a "good-to-go" message, but the search engines will not like being served the identical page as correct from many different URLs).

Result Codes - The client asked the server and the server gave an answer:

result code 200 - the request for content is valid and I'm sending along the page

result code 304 - Not Modified (since last request from the same something?)

result code 404 - Not Found (but in addition to the result code, a page of some sort can be given?)
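On my 404 question: as far as I can tell, yes, a server can send a 404 status and still include a friendly page in the body. Here is a minimal Python sketch of the idea, written as a toy WSGI app that is called directly with no network involved. The paths and page contents are invented, and this is not my host's actual setup.

```python
from wsgiref.util import setup_testing_defaults

# Hypothetical site content: path -> (content type, body).
PAGES = {
    "/": ("text/html", b"<h1>Home</h1>"),
    "/robots.txt": ("text/plain", b"User-agent: *\nDisallow:\n"),
}
NOT_FOUND_PAGE = b"<h1>Sorry, we could not find that page</h1>"


def app(environ, start_response):
    """Answer 200 for known paths and 404 (with a friendly page) otherwise."""
    page = PAGES.get(environ.get("PATH_INFO", "/"))
    if page is None:
        # The status line says "not found"; the body is still a helpful page.
        start_response("404 Not Found", [("Content-Type", "text/html")])
        return [NOT_FOUND_PAGE]
    content_type, body = page
    start_response("200 OK", [("Content-Type", content_type)])
    return [body]


def request(path):
    """Call the app directly and return (status, body)."""
    environ = {}
    setup_testing_defaults(environ)
    environ["PATH_INFO"] = path
    seen = {}

    def start_response(status, headers):
        seen["status"] = status

    body = b"".join(app(environ, start_response))
    return seen["status"], body


for path in ("/", "/robots.txt", "/no-such-page"):
    status, _ = request(path)
    print(path, "->", status)
```

The visitor sees a helpful page either way; only the status line tells the spider whether the URL really exists.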

Redirects - Are page and domain redirects the same thing?

I have domains with great names that I want to redirect. Best way....

My hoster allows me to do something (redirect?) which I've done for:

http://www.Time4Math.com

http://www.Time4Reading.com

<meta http-equiv="Refresh" content="5; url=http://www.newlocation.com/page.html">

quoted from: http://web.mit.edu/is/web/reference/webspace/redirects.html
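The meta refresh above runs in the visitor's browser after the page has loaded. The alternative that keeps coming up is a server-side 301 (Moved Permanently), where the server itself answers with the new address. A minimal Python sketch of the idea, again as a toy WSGI app called directly: the mapping of my spare domains to the Time4Learning home page is an assumption for illustration, and this is not what my hoster actually runs.

```python
from wsgiref.util import setup_testing_defaults

# Assumption: both spare domains should land on the main site's home page.
REDIRECTS = {
    "www.time4math.com": "http://www.time4learning.com/",
    "www.time4reading.com": "http://www.time4learning.com/",
}


def redirect_app(environ, start_response):
    """Send a permanent redirect for spare domains, a normal page otherwise."""
    host = environ.get("HTTP_HOST", "").lower()
    target = REDIRECTS.get(host)
    if target is not None:
        start_response(
            "301 Moved Permanently",
            [("Location", target), ("Content-Length", "0")],
        )
        return [b""]
    start_response("200 OK", [("Content-Type", "text/html")])
    return [b"<h1>Main site</h1>"]


def fetch(host):
    """Call the app directly and return (status, Location header or None)."""
    environ = {}
    setup_testing_defaults(environ)
    environ["HTTP_HOST"] = host
    seen = {}

    def start_response(status, headers):
        seen["status"] = status
        seen["headers"] = dict(headers)

    b"".join(redirect_app(environ, start_response))
    return seen["status"], seen["headers"].get("Location")


for host in ("www.Time4Math.com", "www.Time4Reading.com", "www.time4learning.com"):
    print(host, "->", fetch(host))
```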

Sources:

Vanessa sent me to: To err or not to err 200s vs 404s

I found Status Code Definitions

Initially, other than sheer disorientation and the coincidence of them being three-digit numbers in the web world, there may have been no reason to confuse redirects and error codes. But now that I understand the difference, I have learned that they actually converge.

PS. 529s are neither redirects nor result codes; they are state-sponsored investment programs for higher education that are given special tax status. Somehow that made it onto my notes from talking with Vanessa Fox. Did she give me tax advice, is my hearing a problem, or am I that confused? Or all of the above?


Wednesday, July 12, 2006

Google PPC - Hints from the google gurus...

At SES Miami, in addition to the organic search people, I had time with Lina Goldberg & Ashley Buehn from the ppc team.

I used to be a big-time AdWords spender (see my Google fridge) but have reduced it to a much smaller spend in the last six months and done very little management of it. Face-to-face with the optimizers, this is what I learned.


Negative Keywords - Use Them!!!

They will change your life. And your account.

- Lina Goldberg

Here's what I retained from the session (other than that they are pretty nice to anybody who has earned a refrigerator).

1. Look up my terms and explore them using the AdWords keyword tool. Find negative keywords and use them. I had used some (e.g., for reading, I had negative keywords for Pennsylvania, UK, astrology, future, medical, public, etc.) but not a lot. Lina says use them a lot. Personally, while I see how it would improve my CTR, the real problem has always been conversions, not click-throughs, and I think my ads make it clear that I'm a kids' literacy tool, not a way of telling the future or of speed reading. But I'll give it a try.

2. Get organized. My learning PPC campaign is a messy, disorganized hodgepodge. This makes analysis very difficult.

3. Try the nifty new AdWords interface. This is a software package to download and use to analyze and manage my account. Still in beta, but pretty exciting, they say.
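To make the negative keyword idea concrete, here is a minimal Python sketch. It is a deliberate simplification (whole-word matching only) and certainly not how AdWords really matches queries. The negative keywords are the ones I listed above; the sample searches are invented.

```python
# Negative keywords from my "reading" campaign, lowercased for comparison.
NEGATIVE_KEYWORDS = ["pennsylvania", "uk", "astrology", "future", "medical", "public"]


def ad_would_show(query, keyword, negative_keywords=NEGATIVE_KEYWORDS):
    """Simplified rule: every keyword word is in the query, and no negative word is."""
    words = query.lower().split()
    if not all(word in words for word in keyword.lower().split()):
        return False
    return not any(negative.lower() in words for negative in negative_keywords)


# Invented example searches.
for query in (
    "reading games for kids",
    "reading pennsylvania outlet mall",
    "palm reading future",
):
    print(query, "->", "show ad" if ad_would_show(query, "reading") else "skip")
```

The point of the exercise: the second and third searches would have cost me an impression (and maybe a click) from someone who was never going to sign up.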

I also lobbied for changes to address my pet peeves.

1. I set up my campaign with conversion tracking counting a mix of email sign-ups and customer registrations, so the conversion data in the account is useless. I did set up the sales count data accurately. But the AdWords interface is set up so that to look at my conversion rate (the sales count variable) by keyword or campaign, I need to go to the report center, generate reports, and analyse spreadsheets. Why can't I change the variables that the account shows in its default reports?

2. Effective analysis means being able to aggregate data across a number of different variables to find patterns and statistically meaningful data. A pool tool would allow you to do all the analysis that we now do using sorts and filters in spreadsheets. For more info, see the pool tool.
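Roughly what I mean by pooling, as a minimal Python sketch: add up clicks and sales across rows that share a value (campaign, keyword, whatever) and compute the conversion rate on the pooled totals. The rows, the field names, and the numbers are all invented for illustration; they are not my account data or any real AdWords report format.

```python
from collections import defaultdict

# Invented report rows, one per keyword.
ROWS = [
    {"campaign": "reading", "keyword": "learn to read", "clicks": 200, "sales": 4},
    {"campaign": "reading", "keyword": "phonics games", "clicks": 300, "sales": 6},
    {"campaign": "math", "keyword": "math games", "clicks": 500, "sales": 5},
]


def pool(rows, by):
    """Sum clicks and sales for rows sharing the same value of `by`."""
    totals = defaultdict(lambda: {"clicks": 0, "sales": 0})
    for row in rows:
        bucket = totals[row[by]]
        bucket["clicks"] += row["clicks"]
        bucket["sales"] += row["sales"]
    return {
        name: {
            **total,
            "conversion_rate": total["sales"] / total["clicks"] if total["clicks"] else 0.0,
        }
        for name, total in totals.items()
    }


for name, total in pool(ROWS, by="campaign").items():
    print(name, total)
```

Pooling matters because a single keyword with a handful of clicks says very little on its own; the combined totals are where patterns start to be statistically meaningful.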


Tuesday, July 11, 2006

Google Query Stats Questions - what does it mean?

I just lucked into the most fabulous opportunity: the SES Exhibit Hall in Miami featured a well-staffed Google booth and not that many visitors. I got Vanessa Fox's full attention for an hour. (If only I had been better prepared with questions. What an opportunity!!!)

I need to revisit this question about Top Search Queries.... What does it mean?

It's explained as: "Top search queries are the queries on the selected search property that most often returned pages from your site" ...over the previous three weeks.

Does this mean that:

a. These are the searches that my site ranks highest on. I don't think so, since I know there are searches where I am number one but which are not listed. Those are lower-volume searches.

b. These are the highest-volume searches that returned my site in the results. If this is true, what is the cut-off that Google is using to say that my site was "returned"? My table has searches where the highest average position is 11.

I thought b. was the answer, but Vanessa said that this couldn't be the whole story since she has seen tables where people are listed with positions as low as the 40s.

I've now thought of a third possible explanation...

c. These are the highest-volume searches where some minimum number of people actually clicked on my link.

This is the only explanation that I can think of that can explain this table.

This would require Google to:

1 - Find searches where people have clicked through to my site

2 - Prioritize them by volume

Explanation c also makes sense because it's using similar data to what's in the second table, but in a different way. I will test this by picking some huge-volume terms such as "learning games" or "homeschool" (where I place around 40th), clicking on my listing, and seeing if these terms hit the table. Stay tuned....
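Explanation c, written out as a minimal Python sketch. This is only my guess at the logic, not anything Google has confirmed. The search volumes are borrowed from the keyphrase table at the top of this page; the click counts and the minimum of one click are invented.

```python
def top_search_queries(stats, min_clicks=1, limit=20):
    """Guess c: keep queries with enough clicks, then rank them by search volume."""
    clicked = [row for row in stats if row["clicks"] >= min_clicks]
    ranked = sorted(clicked, key=lambda row: row["volume"], reverse=True)
    return [row["query"] for row in ranked[:limit]]


# Volumes from my keyphrase table; click counts are made up.
stats = [
    {"query": "homeschool", "volume": 137953, "clicks": 0},
    {"query": "homeschool curriculum", "volume": 28814, "clicks": 12},
    {"query": "homeschool online", "volume": 6441, "clicks": 3},
]

before = top_search_queries(stats)
stats[0]["clicks"] = 1  # my planned test: click my own listing for "homeschool"
after = top_search_queries(stats)

print("before:", before)
print("after: ", after)
```

If guess c is right, a single click on a huge-volume term should push it to the top of the table, which is exactly what my test is meant to check.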

I'm also puzzling now over the term: "selected search properties". What does this peculiar phrase mean?

My blog with most of the notes from the conversation: http://learn-to-market-online116.blogspot.com/2006/07/vanessa-fox-at-ses-miami-google.html and my site: http://www.Time4Learning.com

PS - my next personal project is to figure out how to use trackbacks on blogs and some other basics about blogging. I just sent this in to a newsgroup and google has let it go up: I'm also going to put it up on David Naylor's blog, is that too many?


vanessa fox at SES Miami - google insights for free!


I drove to the SES Miami conference today and found an underpopulated exhibit hall and a booth full of Google employees ready to help by answering questions. I cannot begin to describe my excitement.

A patient and knowledgeable Vanessa Fox fielded my questions, which were too often punctuated by, "Sorry, can you back up and explain...." She did back up and try again. Thanks, thanks, thanks.

Here's what I retained. I only wish I had a bigger brain, more time to prepare, and better note-taking....

1. My site is basically OK. My two tiered site map is fine, there's no clear advantage for me building & maintaining a site map, and there are no obvious red flags to worry about. But.....

2. Stop wasting time building internal links. Google doesn't count or weigh them. Internal links only count in so far as the spider uses them to find pages. It's only external links that give pages "page rank". (I asked "Is this "party line" or is it true?" She assured me it was true-true. Then she smiled at me. I believed her.)

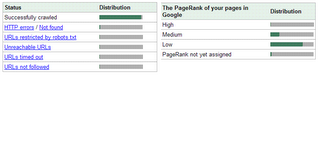

3. Stop wasting time obsessing over the page rank bar. It's even more useless than I thought. And while Google Sitemaps will tell us the one page that is doing the best on our site, Vanessa will "not ever ever ever tell" what the relative page rank of any other internal page is. She said that my page crawl stats show a healthy distribution of page rank and that there are no real problems with timeouts (despite what the reportcard web site was telling me) or code errors.

4. Learn to use redirects. This has been an area of ignorance for me. Learn about 200s, 404s, and 301s, and how to use my excess domains through redirects. Also, since I have no robots.txt file, a request for it gets my "page not found" handling, which returns a 200 and serves up a default page that people like but that doesn't much help the spider.

5. Build a robots.txt file, which is easy peasy. Now it's done!

6. Put nofollow on my links to secure pages (i.e., https:) to save time for the spider.

7. Do not repeat text. If possible, have my new articles start on my site and then get republished so it's clear that I'm the source. I've been doing this backwards by having a blog which I use for rough drafts of pages, which I then move to my site when they are well written.

8. For keyphrases which the Google crawl query stats list as a high-volume search but which are not in the high-volume clicks table, revisit how my listing appears in the Google search results to make it more attractive.

9. The page analysis shows some terms as not appearing often in my site but which I consider to be crucial. Use them more!

Read read read - http://www.google.com/support/webmasters/

http://gsitecrawler.com/articles/error-404-200.asp

http://google.com/support/webmasters/bin/answer.py?answer=40132&topic=8845

http://google.com/support/webmasters/bin/topic.py?topic=8843

http://www.mattcutts.com/blog/guest-post-vanessa-fox-on-organic-site-review-session/

http://groups.google.com/group/google-sitemaps

thanks thanks thanks thanks to vanessa fox

Are you coming back next year?

Sunday, July 09, 2006

Intermediate SEO blogging

I corresponded with Adam (who makes to-do lists). Here are his answers (in italics) to my questions on blog software.

Here are some blog and non-blog related articles that I find very valuable

The Blogger's Primer. Key new points for me in Aaron Brazell's article: use of trackbacks (which I still don't really get), the focus on readers & audience (which frankly is not the intent of all blogs; mine seem to have no readers, but they still attract search engine attention and create links of value), and points of blog netiquette.

http://www.seomoz.org/beginners.php - Good article, not focused on blogs. Did have an item that caught my eye: the "No follow" link - SEOmoz

http://webproworld.com/viewtopic.php?t=62690 - Another good starter overview, non-blog oriented

I also mentioned Feedburner in one article recently. Feedburner can help promote your blog and it takes about five minutes to set up– my advice would be to spend a few minutes and give it a shot, it’s one of those things that’s easier to make sense of why you need it after you’ve tried it.

- This is now done, although I got stymied in the set-up when Feedburner said:

Advanced Publicity: Modifying your "Autodiscovery" settings

If you want to make your FeedBurner feed easier still for site visitors to subscribe to, you should modify the "autodiscovery" HTML tags in your Blogger site template...our Support Forums (link) explains how to modify the autodiscovery tags in Blogger.

And I quote the Feedback forum/blog link:

"Tip #1: Offer a single feed. If a visitor comes to your site and sees a number of different chicklets ...."

Whoops. Lost me right there. What's a chicklet? And then when I got to Tip 2, I understood even less, with its code for the chicklet rather than the burned feed... huh?


Friday, July 07, 2006

Which blog & tools are best for SEO purposes?

I've started to wonder what's the best blog software or setup to use if the goal is SEO. I thought about this when I read Adam's (who makes to-do lists) column this morning:

Start a blog and sign up for Feedburner. Put up a blog at www.yoursite.com/blog. It doesn't matter if you use WordPress, Blogger, or whatever blog platform you prefer, just make sure you host it on your site. Make a few posts about your site, what people can expect when it launches, and why your site will be unique. After your first post, sign up for a Feedburner account. Under 'Publicize' in your account make sure to sign up for 'Ping Shot'. This will notify blogging directories of your posts which hopefully will result in a few links to your site.


- Is it the blog software that matters such as blogger, wordpress, iblogs etc?

- Is it better or worse to host it on your own domain for SEO purposes?

- How good is the built-in ping from the software?

- Does manually using pingomatic help?

- Are blog communities a help or hindrance? (such as www.homeschoolblogger.com/EdMouse)

- What is this feedburner?

Tuesday, July 04, 2006

HTML Errors Do NOT matter - - - today.

Like magic, this email arrived answering the question that I asked last week: HTML code errors & SEO - Do HTML errors matter?

Do Search Engines Care About Valid HTML?

(thank you Entire Web. More specifically, thanks Adam for the article and your help Getting Organized)

Like most web developers, I've heard a lot about the importance of valid html recently. I've read about how it makes it easier for people with disabilities to access your site, how it's more stable for browsers, and how it will make your site easier to be indexed by the search engines.

So when I set out to design my most recent site, I made sure that I validated each and every page of the site. But then I got to thinking – while it may make my site easier to index, does that mean that it will improve my search engine rankings? How many of the top sites have valid html?

To get a feel for how much value the search engines place on being html validated, I decided to do a little experiment. I started by downloading the handy Firefox HTML Validator Extension (http://users.skynet.be/mgueury/mozilla/) that shows in the corner of the browser whether or not the current page you are on is valid html. It shows a green check when the page is valid, an exclamation point when there are warnings, and a red x when there are serious errors.

I decided to use Yahoo! Buzz Index to determine the top 5 most searched terms for the day, which happened to be "World Cup 2006", "WWE", "FIFA", "Shakira", and "Paris Hilton". I then searched each term in the big three search engines (Google, Yahoo!, and MSN) and checked the top 10 results for each with the validator. That gave me 150 of the most important data points on the web for that day.

The results were particularly shocking to me – only 7 of the 150 resulting pages had valid html (4.7%). 97 of the 150 had warnings (64.7%) while 46 of the 150 received the red x (30.7%). The results were pretty much independent of search engine or term. Google had only 4 out of 50 results validate (8%), MSN had 3 of 50 (6%), and Yahoo! had none. The term with the most valid results was "Paris Hilton" which turned up 3 of the 7 valid pages. Now I realize that this isn't a completely exhaustive study, but it at least shows that valid html doesn't seem to be much of a factor for the top searches on the top search engines.

Even more surprising was that none of the three search engines home pages validated! How important is valid html if Google, Yahoo!, and MSN don't even practice it themselves? It should be noted, however, that MSN's results page was valid html. Yahoo's homepage had 154 warnings, MSN's had 65, and Google's had 22. Google's search results page not only didn't validate, it had 6 errors!

In perusing the web I also noticed that immensely popular sites like ESPN.com, IMDB, and MySpace don't validate. So what is one to conclude from all of this?

It's reasonable to conclude that at this time valid html isn't going to help you improve your search position. If it has any impact on results, it is minimal compared to other factors. The other reasons to use valid html are strong and I would still recommend all developers begin validating their sites; just don't expect that doing it will catapult you up the search rankings right now.

About the Author: Adam McFarland owns iPrioritize - to-do lists that can be edited at any time from any place in the world. Email, print, check from your mobile phone, subscribe via RSS, and share with others. John updated his thoughts on HTML Correctness in Feb 2007

Monday, July 03, 2006

Google Adwords - One million

I'm a huge fan of Google AdWords. Reasons:

1. Last holiday season, they sent me a gift package that included a nifty USB memory key, a tiny Google mouse (I gave it to my daughter), a USB hub (Ilan Berkner quickly found a use for it), a USB light (really cool, but I'm not sure what to do with it), and a leather carrying case for it all.

2. This past week, I received a certificate suitable for framing (see attached) and... drum roll please... a small refrigerator (I'll take a picture of it soon) suitable for a small office, bedroom, or cube.

3. I built my little business with AdWords. BTW, I will admit that while I built my business with AdWords and use AdWords for a lot of interesting research, I've never actually profited from it. The problem is that I can't seem to get the cost per customer acquisition down below the value of the customer. So I built a nice small business from AdWords but have been forced to look elsewhere to build a profitable business.

Now I do maintain a flow of AdWords-based traffic, which I use to analyse different landing pages, key phrases, and seasonality. Since Google's AdWords has a built-in analysis tool and I have a lot of history on the value of their traffic (apparently over one million leads), I have a good database to compare and analyse my results against. Let me think: at a dollar per click, I have now paid Google one million dollars. OK, I admit, I don't pay $1 per click very often. There are only a few terms out there that I pay that much for. What are they? Ooooooo, that's a big secret.

I also pruned and optimized my little AdWords campaign to a size where it is profitable. But small. In any case, thanks, Google. I have just built a link to an authority site, which must give credibility to this blog.

Trivia question. According to alexa, what are the number one and two sites by visitor time?

- yahoo

Site Architecture for SEO Purposes

Fact is, I'm such a beginner that there are so many basics which, if I could figure them out or if someone would teach me, I'm sure would help me do much better in SEO. My biggest recent breakthrough is that I'm up to #11 (from #12, where I sat for a month) on homeschool curriculum. Just a little more tailwind and I might make it onto the front page of Google... then I move to (sing along)... Easy Street... (anybody seen Annie lately?)

Let's talk site architecture and sitemaps. (Note: I'm not even ready to do a nifty Google site map.)

I have a site map that I set up so visitors could find what they were looking for, so the spiders could find all the pages, and so that I with my faulty memory could remember how I had organized my site. Pretty soon, my site map became too confusing and lengthy for visitors to find anything. I re-organized it and re-organized it. I started leaving off less important (for people) pages and soon, I couldn't remember what pages I had (vs pages I had just thought about making) without firing up the tools. Once I found myself creating a set of pages that when I started to put up, I found that I had written the month before (sad but true). So my new approach is that I have two site map pages...

- a site map that is linked from most pages, including the home page.

- a siteindex which has all of my pages listed.

Now, I think that I've figured out how the pros do it. I'm looking at a page belonging to some big SEO experts (I'm not sure if it's a secret that they own it) in which they have both a human-oriented and a computer-oriented map on the same page, but the computer one is hidden unless the humans click for more. Take a look on the left side under the label "additional resources":

http://www.socialstudieshelp.com/

Very clever. I wonder if google might somehow someday consider that hidden text and black-hattish.

